How to query Azure APIs using PowerShell

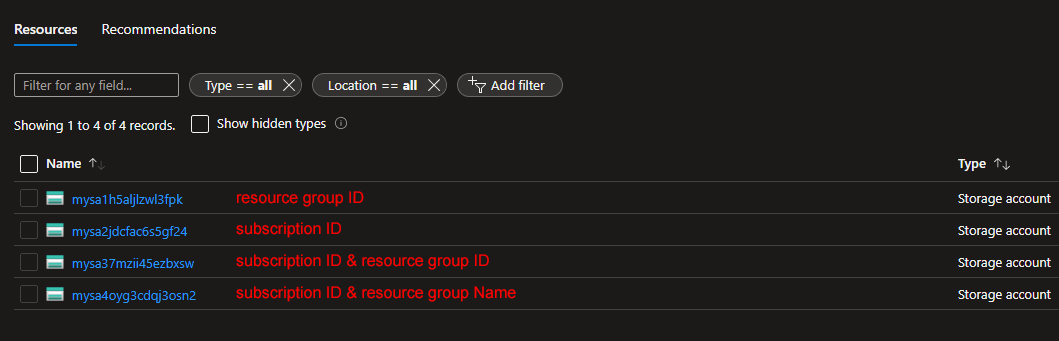

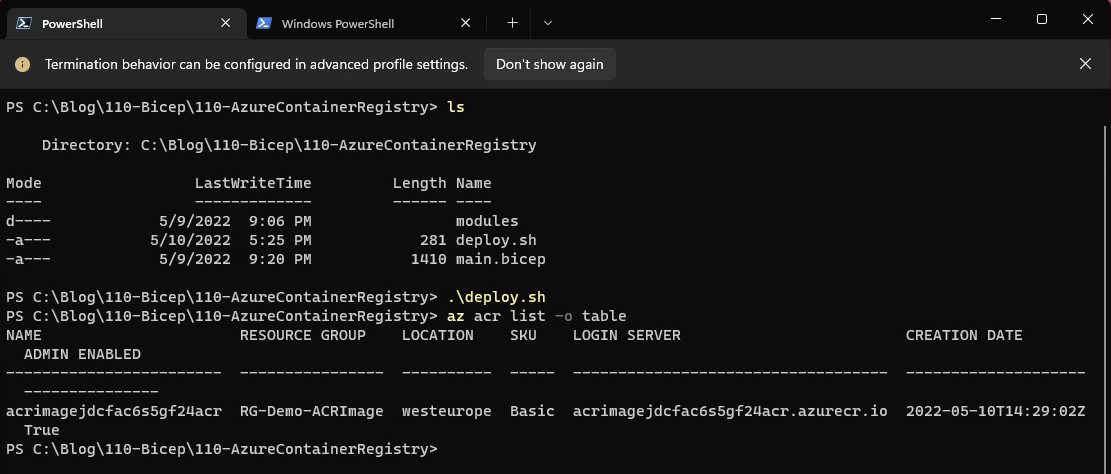

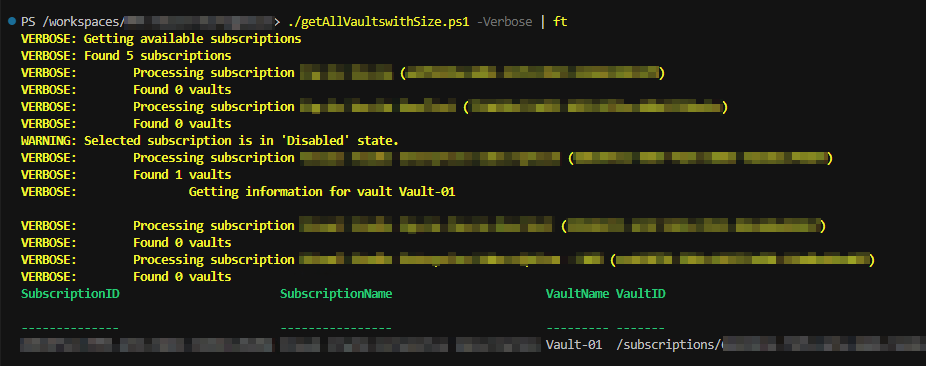

Azure REST APIs are a great way to manage your Azure resources: you can use them to create, update, delete, and list resources, as well as to get information about them. In many cases, the tools Microsoft provides, such as Azure CLI and Azure PowerShell, don't offer the functionality you need, so you have to turn to the APIs directly.

As an example, we're going to create a script that gets the size of the storage consumed by Recovery Services vaults. That information is shown in the Azure portal but isn't available from the command-line tools, so if you have a lot of vaults and need the size of the storage behind each of them, you have to automate the process using the Azure APIs. Below is the entire script, but don't dive right in; let's take it one step at a time!
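Before the full script, it may help to see the core idea in isolation. The sketch below is not the author's script. It assumes the Az.Accounts module (which provides `Invoke-AzRestMethod`) and a session already signed in with `Connect-AzAccount`. The vault `usages` endpoint, the default `api-version`, and the helper names `Get-VaultUsagePath`, `ConvertTo-VaultUsageSummary`, and `Get-VaultStorageUsage` are hypothetical choices for illustration; check the endpoint and a supported API version against the Recovery Services REST API reference before relying on them.

```powershell
# Minimal sketch (not the original script): read a Recovery Services vault's
# usage figures through the Azure Resource Manager REST API.
# Assumptions: the vault "usages" endpoint, the default api-version, and all
# function names below are hypothetical; verify them against the REST reference.

function Get-VaultUsagePath {
    param(
        [Parameter(Mandatory)] [string] $SubscriptionId,
        [Parameter(Mandatory)] [string] $ResourceGroupName,
        [Parameter(Mandatory)] [string] $VaultName,
        # Assumed API version; replace it with one the REST reference lists.
        [string] $ApiVersion = '2016-06-01'
    )
    # Relative ARM path; Invoke-AzRestMethod -Path resolves it against the
    # Resource Manager endpoint of the current Azure context.
    "/subscriptions/$SubscriptionId/resourceGroups/$ResourceGroupName" +
        "/providers/Microsoft.RecoveryServices/vaults/$VaultName/usages?api-version=$ApiVersion"
}

function ConvertTo-VaultUsageSummary {
    param(
        [Parameter(Mandatory)] [string] $Json,
        [Parameter(Mandatory)] [string] $VaultName
    )
    # ARM list operations return their items in a top-level "value" array.
    foreach ($usage in (ConvertFrom-Json $Json).value) {
        [pscustomobject]@{
            Vault        = $VaultName
            Metric       = $usage.name.localizedValue
            Unit         = $usage.unit
            CurrentValue = $usage.currentValue
            # Byte counts are also expressed in GiB; other units get no size.
            SizeGiB      = if ($usage.unit -eq 'Bytes') { [math]::Round([double]$usage.currentValue / 1GB, 2) } else { $null }
        }
    }
}

function Get-VaultStorageUsage {
    param(
        [Parameter(Mandatory)] [string] $SubscriptionId,
        [Parameter(Mandatory)] [string] $ResourceGroupName,
        [Parameter(Mandatory)] [string] $VaultName
    )
    # Requires Az.Accounts and a prior Connect-AzAccount; Invoke-AzRestMethod
    # attaches the signed-in account's access token to the request.
    $path = Get-VaultUsagePath -SubscriptionId $SubscriptionId -ResourceGroupName $ResourceGroupName -VaultName $VaultName
    $response = Invoke-AzRestMethod -Method GET -Path $path
    if ($response.StatusCode -ne 200) {
        throw "Usage request for '$VaultName' failed ($($response.StatusCode)): $($response.Content)"
    }
    ConvertTo-VaultUsageSummary -Json $response.Content -VaultName $VaultName
}
```

Assuming the Az.RecoveryServices module is also installed, you could run it against every vault in the current subscription with `Get-AzRecoveryServicesVault | ForEach-Object { Get-VaultStorageUsage -SubscriptionId $_.SubscriptionId -ResourceGroupName $_.ResourceGroupName -VaultName $_.Name }`. Keeping the JSON parsing in its own function means it can be tested against a saved response without calling Azure at all.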