Publishing AKS services to private networks using NGINX

In one of the previous posts, we used NGINX as the ingress controller in Azure Kubernetes Service (AKS) to publish applications and services running in the cluster (article is available here). In that deployment, NGINX used a public IP for the ingress service, which may not be suitable for services that should be kept private or protected by another solution. In today's post, we're going to deploy NGINX so that the ingress service uses a private IP from the same vNet that the cluster is built on top of.

After the usual steps of logging in and selecting the subscription in Azure CLI, you just have to execute the deployment script, which deploys the main.bicep file and all of its sub-resources to create the AKS platform on Azure. The next step is to get the cluster credentials with the az aks get-credentials command, as shown in the getAKSCredentials.sh script, so that you can connect to the cluster with kubectl.
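For reference, that flow could look roughly like the sketch below. The subscription placeholder, the westeurope location and the subscription-scoped deployment of main.bicep are assumptions, so the actual deployment script may differ; the cluster and resource group names (aks and RG-NGINX-AKS) are taken from the role-assignment commands later in this post.

```bash
# Log in and pick the subscription to deploy into (placeholder value)
az login
az account set --subscription "<subscription-name-or-id>"

# Assumption: main.bicep is deployed at subscription scope to westeurope
az deployment sub create --location westeurope --template-file main.bicep

# Merge the cluster credentials into ~/.kube/config and check connectivity
az aks get-credentials --resource-group RG-NGINX-AKS --name aks
kubectl get nodes
```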

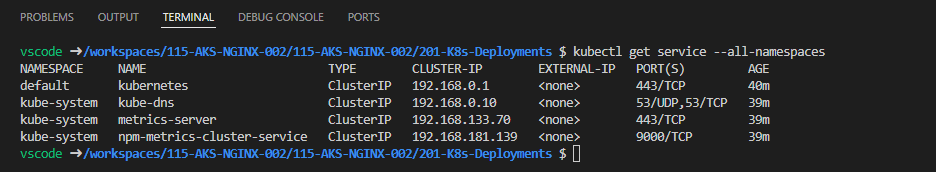

If you list the services that are created by default, you should only see services of type ClusterIP, like below:
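From the command line, one way to list every service together with its type is a custom-columns query; the column selection here is only a suggestion.

```bash
# Show namespace, name, type and cluster IP for all services in the cluster
kubectl get services --all-namespaces \
  -o custom-columns=NAMESPACE:.metadata.namespace,NAME:.metadata.name,TYPE:.spec.type,CLUSTER-IP:.spec.clusterIP
```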

Let's also take a look at the resources that have been created in the managed cluster resource group:
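The managed cluster resource group (also called the node resource group) can be inspected from the CLI as well. A minimal sketch, assuming the cluster is named aks and lives in RG-NGINX-AKS:

```bash
# Look up the name of the managed cluster (node) resource group
nodeRG=$(az aks show --resource-group RG-NGINX-AKS --name aks --query nodeResourceGroup --output tsv)

# List everything AKS created in it: scale sets, identities, load balancer, public IP, ...
az resource list --resource-group "$nodeRG" --output table
```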

Apart from the scale sets and managed identities, we have an Azure Load Balancer that directs network traffic in and out of the cluster and has been assigned the above public IP. To publish services to private networks, we're going to use another instance of Azure Load Balancer, connected to the same vNet as the AKS cluster.

Before moving on to the deployments inside the AKS cluster, we need to grant Contributor-level access to the cluster's managed identity on the virtual network we're using. This is because AKS must be able to update the vNet and create a new Azure Load Balancer connected to it.

The Azure CLI commands below do exactly that; just make sure you update the names of the resources and resource groups:

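```bash
# Principal ID of the AKS cluster's managed identity
spID=$(az resource list -n aks -g RG-NGINX-AKS --query "[*].identity.principalId" --output tsv)
# Resource ID of the vNet the cluster is built on
scope=$(az resource list -n vnet -g RG-NGINX-Networking --query "[*].id" --output tsv)
# Grant the identity Contributor rights on the vNet
az role assignment create --assignee "$spID" --role 'Contributor' --scope "$scope"
```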
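To double-check that the assignment is in place, the same two variables can be reused, for example:

```bash
# List role assignments for the AKS identity on the vNet; a Contributor entry is expected
az role assignment list --assignee "$spID" --scope "$scope" --output table
```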

Moving on to the deployment of NGINX, we are going to use the default Helm chart to install the ingress controller, but with a few changes.

First, we are going to customize the class names so that this controller can be told apart from any other ingress controllers in the cluster (if required). In this case, I've used internal-nginx and internal-ingress-nginx for the controller and ingress classes respectively.

Second, we are going to add some annotations to the NGINX service so that it uses an internal load balancer and a private IP. The last of these annotations instructs AKS to assign the service a private IP from a specific subnet of the vNet. If you omit it, the service will get an IP from the subnet associated with the node pool that the pods are running on.
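As a rough sketch of what such a configuration might look like, the values file below sets the two class names and the two annotations for the ingress-nginx Helm chart. How internal-nginx and internal-ingress-nginx map onto specific chart keys, the k8s.io/ prefix and the subnet name snet-ingress are assumptions for illustration, not taken from 2-helm.sh.

```bash
# Write a values file for the ingress-nginx Helm chart.
# Class names follow the post; their mapping to chart keys is an assumption.
cat > internal-nginx-values.yaml <<'EOF'
controller:
  # Class matched by the legacy kubernetes.io/ingress.class annotation
  ingressClass: internal-ingress-nginx
  ingressClassResource:
    # Name of the IngressClass object, referenced by spec.ingressClassName
    name: internal-ingress-nginx
    # Controller identifier; must differ from any other NGINX install
    controllerValue: k8s.io/internal-nginx
  service:
    annotations:
      # Provision an internal Azure Load Balancer with a private IP
      service.beta.kubernetes.io/azure-load-balancer-internal: "true"
      # Hypothetical subnet name: take the private IP from this subnet
      service.beta.kubernetes.io/azure-load-balancer-internal-subnet: "snet-ingress"
EOF
```

With the chart repository added (`helm repo add ingress-nginx https://kubernetes.github.io/ingress-nginx`), the file could then be passed to the install, e.g. `helm upgrade --install internal-nginx ingress-nginx/ingress-nginx --namespace ingress-nginx --create-namespace -f internal-nginx-values.yaml`. Keeping the settings in a file rather than in `--set` flags avoids escaping the dots in the annotation keys.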

The below screen, taken from the 2-helm.sh file, shows the parameters of the Helm chart:

If you take a closer look at the resources in the managed cluster resource group, you'll notice that a new Azure Load Balancer has been created, and the IP of the NGINX service has been assigned to it:
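A rough way to check the same thing from the CLI, assuming the cluster names used above and that the chart was installed into a namespace called ingress-nginx (an assumption, since the namespace is not stated here):

```bash
nodeRG=$(az aks show --resource-group RG-NGINX-AKS --name aks --query nodeResourceGroup --output tsv)

# AKS normally names the internal load balancer "kubernetes-internal"
az network lb list --resource-group "$nodeRG" --query "[].name" --output tsv
az network lb frontend-ip list --lb-name kubernetes-internal --resource-group "$nodeRG" --output table

# The EXTERNAL-IP of the controller service should be a private address from the vNet
kubectl get services --namespace ingress-nginx
```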

If you deploy an application and create an ingress for it, the ingress will report the same IP as the one assigned to the NGINX service.

You should be able to reach the app from a machine with network access to the vNet (for example, a VM in the same or a peered vNet); just make sure you send the right Host header so that the ingress rule matches:
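A minimal test application might look like the manifest below. Everything in it is hypothetical: the hello names, the nginx:1.25 image and the host name hello.internal.example; the ingressClassName assumes the IngressClass is called internal-ingress-nginx, as described earlier.

```bash
# Hypothetical demo app: a Deployment, a ClusterIP Service and an Ingress
# bound to the internal NGINX controller through its IngressClass name.
cat > hello-app.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: hello
spec:
  replicas: 1
  selector:
    matchLabels:
      app: hello
  template:
    metadata:
      labels:
        app: hello
    spec:
      containers:
      - name: hello
        image: nginx:1.25
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: hello-svc
spec:
  type: ClusterIP
  selector:
    app: hello
  ports:
  - port: 80
    targetPort: 80
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: hello-ingress
spec:
  ingressClassName: internal-ingress-nginx
  rules:
  - host: hello.internal.example
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: hello-svc
            port:
              number: 80
EOF
```

After `kubectl apply -f hello-app.yaml`, `kubectl get ingress hello-ingress` should show the private IP in the ADDRESS column, and from a VM in the vNet something like `curl -H "Host: hello.internal.example" http://<private-ip>/` should return the NGINX welcome page.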

That's it, you have successfully published an app running in AKS to Azure private networks, using NGINX!

Although using an ingress controller is a best practice when publishing applications and services, you may have to publish a service directly from the application namespace. The files in the 115-AKS-NGINX-002/201-K8s-Deployments/301-K8s-LoadBalancerService-001 and 115-AKS-NGINX-002/201-K8s-Deployments/302-K8s-LoadBalancerServiceHardcodedIP-001 folders show how to create a LoadBalancer service, including one with a hardcoded IP.
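As an illustration only (not the contents of those folders), an internal LoadBalancer service with a fixed private IP could be sketched as follows. It assumes pods labelled app: hello, and the address 10.240.0.100 is hypothetical; it has to be a free IP in the subnet the load balancer uses.

```bash
# Hypothetical Service exposing pods labelled app=hello directly on an internal Azure Load Balancer
cat > hello-internal-lb.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: hello-internal-lb
  annotations:
    # Use an internal (private) load balancer instead of a public one
    service.beta.kubernetes.io/azure-load-balancer-internal: "true"
spec:
  type: LoadBalancer
  # Hardcoded private IP (hypothetical). spec.loadBalancerIP is deprecated upstream
  # since Kubernetes 1.24; newer AKS versions also accept the
  # service.beta.kubernetes.io/azure-load-balancer-ipv4 annotation instead.
  loadBalancerIP: 10.240.0.100
  selector:
    app: hello
  ports:
  - port: 80
    targetPort: 80
EOF
```

Applying it with `kubectl apply -f hello-internal-lb.yaml` should make the service's EXTERNAL-IP show the chosen private address once the load balancer rule is provisioned.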

All the files in this demo are available in my GitHub repo over here. Happy coding!