Running Multiple NGINX Ingress Controllers in AKS

In some of the previous articles on this blog, we went through the process of installing NGINX as the ingress controller in AKS clusters, both for applications that should be available directly from the public internet and for applications that should only be accessed from networks considered internal.

In most cases, however, given that an AKS cluster is designed to host multiple applications and provide economies of scale, additional ingress controllers may be required.

In this post, we're going to deploy an additional NGINX ingress controller that will be used for internal applications. The diagram below depicts the desired outcome:

The Angular application is published via the public NGINX through an Azure Load Balancer that has been assigned a public IP. The .NET app is published by a different set of NGINX pods, deployed in a separate namespace, whose ingress controller service is connected to another Azure Load Balancer that uses private IPs.

Let's see it in action! As always, the first step is to deploy the necessary resources on Azure. Clone the repository and use the deploy.sh script to start the deployment. In case you need to adjust the names to your environment, edit the script or the main.bicep file.

When the deployment finishes, there should be four new resource groups in your subscription:

The networking group contains all the networking-related resources, such as the vNet and the bastion host. The VM group contains a virtual machine used to perform tests and manage the cluster. The AKS and AKS-MC groups contain the AKS resource and its managed cluster, respectively.

Now that we have completed the deployment of the two NGINX ingress controllers, it's time to deploy a couple of applications and publish them using ingress resources.

The 201-K8s-App-Public-001 and 211-K8s-App-Internal-001 folders contain the necessary files to deploy two applications: one that uses the public-facing NGINX and one that uses the private NGINX. The deployed application is essentially the same in both cases; the only differences are the namespace and the ingress class to use.

For the public application, the ingress class is set to nginx, which is the default ingress class created by the default installation of the first ingress controller.

This class will result in the ingress being processed by the public NGINX controller.

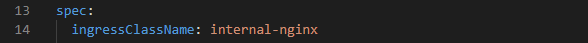
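For readers who prefer text to a screenshot, here is a minimal sketch of what such an ingress resource could look like. Only the `nginx` class name comes from this post; the resource name, namespace, service name, and port are hypothetical placeholders, so check the files in the repository for the real values.

```yaml
# Sketch of an ingress handled by the public NGINX controller.
# Assumption: all names below (app-public, app-service, port 80) are
# illustrative placeholders, not the values used in the repository.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: app-public          # hypothetical
  namespace: app-public     # hypothetical
spec:
  ingressClassName: nginx   # class served by the public NGINX controller
  rules:
    - http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: app-service   # hypothetical
                port:
                  number: 80        # hypothetical
```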

The ingress class of the second application's ingress resource is set to internal-nginx, thus the ingress resource will be processed by the second NGINX controller.
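As a rough sketch, the internal application's ingress could differ from the public one only in its namespace and class. The `internal-nginx` class name is the one used in this post; every other name here is a hypothetical placeholder.

```yaml
# Sketch of an ingress handled by the internal NGINX controller.
# Assumption: all names below (app-internal, app-service, port 80) are
# illustrative placeholders, not the values used in the repository.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: app-internal          # hypothetical
  namespace: app-internal     # hypothetical
spec:
  ingressClassName: internal-nginx   # class served by the second NGINX controller
  rules:
    - http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: app-service   # hypothetical
                port:
                  number: 80        # hypothetical
```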

If you take a closer look at the Helm parameters used to install the second NGINX, you'll notice that internal-nginx is the value for the ingress class resource name!

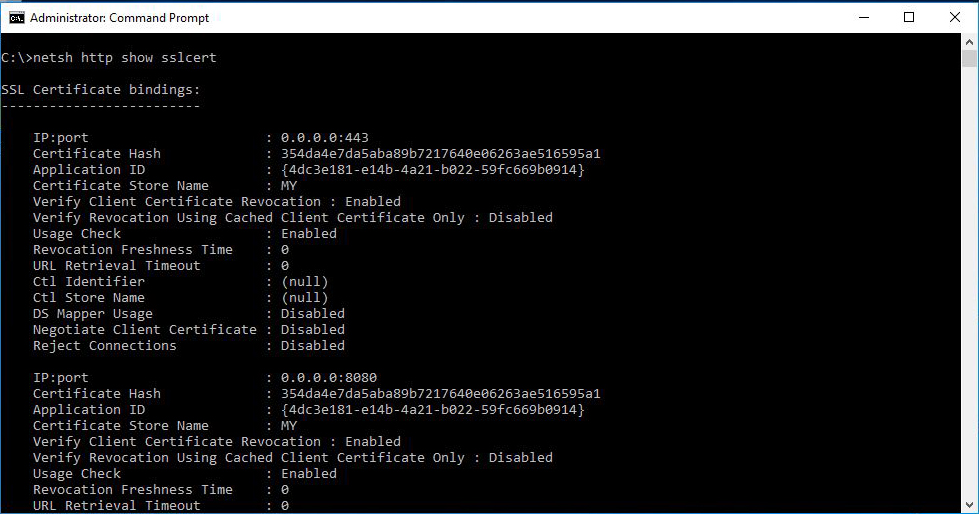
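To make the relationship concrete, the fragment below sketches how those parameters might look as a Helm values file. It assumes the community ingress-nginx chart; the file name and the controller value are my own illustrative choices, and the repository's script may well pass these settings differently (for example, as `--set` arguments).

```yaml
# values-internal.yaml (hypothetical file name)
# Assumption: community ingress-nginx Helm chart; the repository may use
# different values or pass them on the command line instead.
controller:
  ingressClassResource:
    name: internal-nginx                      # class name referenced by the internal ingress
    enabled: true
    default: false                            # do not make this the cluster-wide default class
    controllerValue: "k8s.io/internal-nginx"  # assumed; must differ from the first controller's value
  service:
    annotations:
      # Ask Azure for an internal load balancer with a private IP from the vNet
      service.beta.kubernetes.io/azure-load-balancer-internal: "true"
```

Giving each controller its own class name and controller value is what keeps the two installations from picking up each other's ingress resources.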

The result of the above deployments is two NGINX services, one in each NGINX namespace:

As expected, the service of the default NGINX has been assigned a public IP and the service of the internal NGINX has been assigned an IP from the specific vNet on Azure.

Moving on to the ingress resources, which are the way applications are exposed outside the AKS cluster, we have two of them, one for each application:

The configuration of the ingresses looks correct: each one shows the expected class name and IP address. If for any reason your second ingress is not getting an IP, make sure you have assigned the AKS cluster the required permissions on the vNet, and allow some time to pass.

To verify that the ingresses work as expected, you may use the management virtual machine and bastion host that are deployed as part of the Bicep template to access each application:

Things are looking good: each application is served from its respective namespace (the page shows the name of the server, which starts with "app" for one app and "app2" for the other).

Well, this is how you deploy multiple NGINX ingress controllers in the same Kubernetes cluster!

The two posts below will help you understand more about the role of NGINX in AKS:

- Using NGINX and Ingress Controller on Azure Kubernetes Service (AKS)

- Publishing AKS services to private networks using NGINX

All of the files used in this demo are available on my GitHub repository over here.

Happy coding!